About

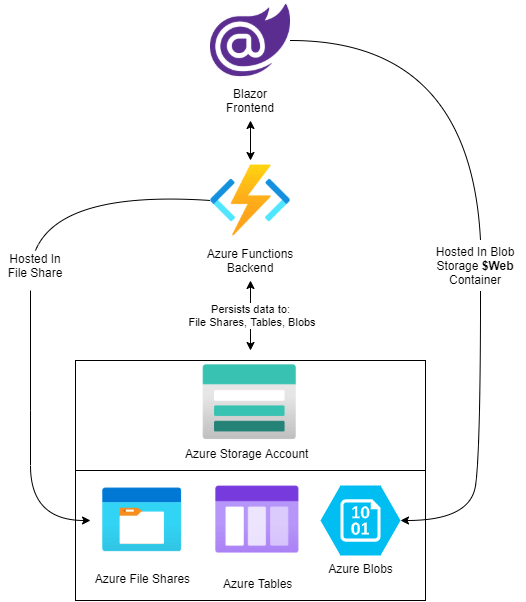

In this post, I will show you how to create a Blazor WebAssembly app in C# with a serverless backend built on Azure Functions. The data will be persisted to Azure Tables, Blobs and File Shares in an Azure Storage Account, which will also be used to host the backend functions and the frontend as a static web page. Finally, we’ll set up a CI/CD pipeline to deploy our app.

I sometimes use this approach for creating full-stack apps because it’s much cheaper than paying for an App Service and an SQL database in Azure. You’ll probably want to go the standard route and just use an ASP.NET backend most of the time. Still, I think this approach can work well if your app doesn’t need a proper login/authentication system, doesn’t make too many calls to the backend, and only needs some basic persistence to a NoSQL database or blob storage.

Working with Azure storage accounts:

1. Working With Azure Storage Account File Shares In C#

2. Working With Azure Storage Account Tables In C#

3. Working With Azure Storage Account Blobs In C#

4. Working With Azure Storage Account Queues In C#

Azure functions:

1. Getting Started With C# Azure Functions

2. Azure DevOps CI/CD Pipeline For Azure Functions

Blazor:

1. Blazor File Uploads/Downloads

Fullstack App Project Code:

I will put the main code of the Blazor Frontend and the Azure Functions backend into this post. However, I think you might find it easier to just get the entire project repository from GitHub.

Blazor App

Blazor Frontend Code:

@page "/"

@inject IJSRuntime JSRuntime

@inject HttpClient client

@inject NavigationManager NavigationManager

@using System.Net.Http;

@using System.Text.Json;

@using System.IO;

@using System.Text;

<PageTitle>Files</PageTitle>

<div style="margin-bottom: 30px;">

<h4>Files Space ID</h4>

<input type="text" @onchange="fileSpaceChanged" value="@fileSpace"></input><button @onclick="() => copyToClipboard(fileSpace)">Copy File Space ID</button>

</div>

<div style="margin-bottom: 30px;">

<h4>Upload File</h4>

<InputFile OnChange="onFileChanged" multiple></InputFile>

</div>

<div style="border: solid 1px black;">

<ul style="margin: 0;">

@if (files.Count() == 0)

{

<li>no files yet</li>

}

@foreach (var file in files)

{

<li>

<div>

<span>@file.Name</span>

<button @onclick="() => downloadFile(file)">Download</button>

<button @onclick="() => deleteFile(file)">Delete</button>

<button @onclick="() => copyToClipboard(file.Link)">Copy Link</button>

<span>@file.TimeStamp.ToShortDateString() @file.TimeStamp.ToShortTimeString()</span>

</div>

</li>

}

</ul>

</div>

@code

{

#region Variables ///////////////////////////////////////////////////////////////

private string fileSpace = "";

List<CloudFile> files = new List<CloudFile>();

#endregion //////////////////////////////////////////////////////////////////////

#region Models ///////////////////////////////////////////////////////////////////

class CloudFile

{

public CloudFile(string name, string fileSpace, string fileID, DateTime timeStamp, string link)

{

Name = name;

FileSpace = fileSpace;

FileID = fileID;

TimeStamp = timeStamp;

Link = link;

}

public string Name { get; set; }

public string FileSpace { get; set; }

public string FileID { get; set; }

public DateTime TimeStamp { get; set; }

public string Link { get; set; }

}

#endregion ///////////////////////////////////////////////////////////////////////

#region Initialization //////////////////////////////////////////////////////////

protected override async Task OnInitializedAsync()

{

fileSpace = Guid.NewGuid().ToString();

}

#endregion //////////////////////////////////////////////////////////////////////

#region Events //////////////////////////////////////////////////////////////////

private async Task downloadFile(CloudFile file)

{

byte[] downloadFile = await client.GetByteArrayAsync(file.Link);

await JSRuntime.InvokeVoidAsync("DownloadFile", file.Name, "application/octet-stream", downloadFile);

}

private async Task deleteFile(CloudFile file)

{

await deleteBlobFile(file.FileID);

}

private async Task onFileChanged(InputFileChangeEventArgs e)

{

//Upload each of the selected files in turn. The backend assigns every upload its own ID.

foreach (var file in e.GetMultipleFiles(e.FileCount))

{

//OpenReadStream() allows at most 512000 bytes by default; pass a larger maxAllowedSize for bigger files.

Stream stream = file.OpenReadStream();

bool success = await uploadFile(fileSpace, file.Name, stream);

}

}

private async Task fileSpaceChanged(ChangeEventArgs eventArgs)

{

fileSpace = eventArgs.Value.ToString();

await getFiles(fileSpace);

}

private async Task copyToClipboard(string textToCopy)

{

await JSRuntime.InvokeVoidAsync("CopyToClipboard", textToCopy);

}

#endregion ///////////////////////////////////////////////////////////////////////

#region Persistence //////////////////////////////////////////////////////////////

string backendBaseURL = "http://localhost:7015/api";

private async Task<bool> deleteBlobFile(string fileId)

{

var request = new HttpRequestMessage(HttpMethod.Delete, backendBaseURL + "/DeleteFile")

{

Headers = { { "FileSpace", fileSpace }, { "FileId", fileId } }

};

var response = await client.SendAsync(request);

if (response.IsSuccessStatusCode)

{

var content = await response.Content.ReadAsStringAsync();

if (content == "true")

{

await getFiles(fileSpace);

return true;

}

}

return false;

}

private async Task<bool> uploadFile(string fileSpace, string fileName, Stream stream)

{

var request = new HttpRequestMessage(HttpMethod.Post, backendBaseURL + "/UploadFiles")

{

Content = new StreamContent(stream),

Headers = { { "FileSpace", fileSpace }, { "FileName" , fileName } }

};

var response = await client.SendAsync(request);

if (response.IsSuccessStatusCode)

{

var content = await response.Content.ReadAsStringAsync();

if (content == "true")

{

await getFiles(fileSpace);

return true;

}

}

return false;

}

private async Task getFiles(string fileSpace)

{

var request = new HttpRequestMessage(HttpMethod.Get, backendBaseURL + "/GetFiles")

{

Headers = { { "FileSpace", fileSpace } }

};

var response = await client.SendAsync(request);

if (response.IsSuccessStatusCode)

{

var content = await response.Content.ReadAsStringAsync();

var receivedFiles = JsonSerializer.Deserialize<List<CloudFile>>(content);

if (receivedFiles is not null)

files = receivedFiles;

}

}

#endregion ////////////////////////////////////////////////////////////////////////

}

JS Interop Code:

function DownloadFile(filename, contentType, content) {

//Create the URL.

const file = new File([content], filename, { type: contentType });

const exportUrl = URL.createObjectURL(file);

//Creates <a> element and clicks on it programmatically.

const a = document.createElement("a");

document.body.appendChild(a);

a.href = exportUrl;

a.download = filename;

a.target = "_self";

a.click();

//Remove the temporary <a> element and release the object URL after clicking on it.

a.remove();

URL.revokeObjectURL(exportUrl);

}

function CopyToClipboard(text) {

navigator.clipboard.writeText(text).then(function () {

console.log('Text copied to clipboard');

}, function (err) {

console.error('Could not copy text: ', err);

});

}

Azure Functions Backend Code:

using System;

using System.IO;

using System.Threading.Tasks;

using System.Net.Http;

using System.Linq;

using System.Collections.Generic;

using System.Text.Json;

using System.Net;

using Microsoft.Extensions.Logging;

using Microsoft.Azure.Functions.Worker;

using Microsoft.Azure.Functions.Worker.Http;

using Azure;

using Azure.Data.Tables;

using Azure.Storage.Blobs;

namespace BlazorBackend

{

public class BlazorBackendEndpoints

{

private readonly ILogger _logger;

private readonly HttpClient _client;

public BlazorBackendEndpoints(ILoggerFactory loggerFactory, IHttpClientFactory httpClientFactory)

{

_logger = loggerFactory.CreateLogger<BlazorBackendEndpoints>();

_client = httpClientFactory.CreateClient();

}

#region Endpoints //////////////////////////////////////////////////////////////

[Function("GetFiles")]

public async Task<HttpResponseData> GetFiles([HttpTrigger(AuthorizationLevel.Function, "Get")] HttpRequestData req)

{

req.Headers.TryGetValues("FileSpace", out var fileSpaceHeader);

string fileSpace = fileSpaceHeader.First().ToString();

var files = await getFiles(fileSpace);

var serializedFiles = JsonSerializer.Serialize(files);

//Create and return a response.

var response = req.CreateResponse(HttpStatusCode.OK);

response.Headers.Add("Content-Type", "application/json; charset=utf-8");

response.WriteString(serializedFiles);

return response;

}

[Function("UploadFiles")]

public async Task<HttpResponseData> UploadFiles([HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequestData req)

{

Stream fileContents = req.Body;

req.Headers.TryGetValues("FileSpace", out var fileSpaceHeader);

string fileSpace = fileSpaceHeader.First().ToString();

req.Headers.TryGetValues("FileName", out var fileNameHeader);

string fileName = fileNameHeader.First().ToString();

string fileID = Guid.NewGuid().ToString();

await uploadFile(fileID, fileName, fileSpace, fileContents);

//Create and return a response.

var response = req.CreateResponse(HttpStatusCode.OK);

response.Headers.Add("Content-Type", "text/plain; charset=utf-8");

response.WriteString("true");

return response;

}

[Function("DeleteFile")]

public async Task<HttpResponseData> DeleteFile([HttpTrigger(AuthorizationLevel.Function, "delete")] HttpRequestData req)

{

req.Headers.TryGetValues("FileSpace", out var fileSpaceHeader);

string fileSpace = fileSpaceHeader.First().ToString();

req.Headers.TryGetValues("FileId", out var FileIdHeader);

string FileId = FileIdHeader.First().ToString();

await deleteFile(fileSpace, FileId);

//Create and return a response.

var response = req.CreateResponse(HttpStatusCode.OK);

response.Headers.Add("Content-Type", "text/plain; charset=utf-8");

response.WriteString("true");

return response;

}

#endregion //////////////////////////////////////////////////////////////////////

#region Methods /////////////////////////////////////////////////////////////////

private static async Task uploadFile(string fileID, string fileName, string fileSpace, Stream fileContents)

{

await blobUpload(fileID, fileContents);

await addTableFileEntry(fileID, fileName, fileSpace);

}

private static async Task blobUpload(string blobName, Stream stream)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string containerName = "files-container";

BlobServiceClient blobServiceClient = new BlobServiceClient(connectionString);

BlobContainerClient containerClient = blobServiceClient.GetBlobContainerClient(containerName);

await containerClient.CreateIfNotExistsAsync();

BlobClient blobClient = containerClient.GetBlobClient(blobName);

await blobClient.UploadAsync(stream);

}

private static async Task addTableFileEntry(string fileID, string fileName, string fileSpace)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string tableName = "FilesTable";

TableClient tableClient = new TableClient(connectionString, tableName);

await tableClient.CreateIfNotExistsAsync();

//Create a new entity(table row).

FilesTable entity = new FilesTable

{

PartitionKey = "partition1",

RowKey = fileID,

FileName = fileName,

FileSpace = fileSpace

};

await tableClient.AddEntityAsync(entity);

}

private static async Task<List<CloudFile>> getFiles(string fileSpace)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string tableName = "FilesTable";

TableClient tableClient = new TableClient(connectionString, tableName);

Pageable<FilesTable> entities = tableClient.Query<FilesTable>(filter: $"FileSpace eq '{fileSpace}'"); //This will query all the rows from the table whose FileSpace column matches the given file space.

List<CloudFile> files = new List<CloudFile>();

foreach (FilesTable entity in entities)

{

string link = await getBlobUri(entity.RowKey);

files.Add(new CloudFile(

entity.FileName,

entity.FileSpace,

entity.RowKey,

entity.Timestamp.Value.DateTime,

link

));

}

return files;

}

private static async Task<string> getBlobUri(string blobName)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string containerName = "files-container";

BlobServiceClient blobServiceClient = new BlobServiceClient(connectionString);

BlobContainerClient containerClient = blobServiceClient.GetBlobContainerClient(containerName);

BlobClient blobClient = containerClient.GetBlobClient(blobName);

//Check if the blob exists.

if (!blobClient.Exists())

return "";

string sasURI = blobClient.GenerateSasUri(Azure.Storage.Sas.BlobSasPermissions.Read, DateTimeOffset.Now.AddDays(1)).AbsoluteUri;

return sasURI;

}

private static async Task deleteFile(string fileSpace, string fileId)

{

await deleteBlob(fileSpace, fileId);

await deleteTableEntry(fileSpace, fileId);

}

private static async Task deleteBlob(string fileSpace, string fileId)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string containerName = "files-container";

BlobServiceClient blobServiceClient = new BlobServiceClient(connectionString);

BlobContainerClient containerClient = blobServiceClient.GetBlobContainerClient(containerName);

BlobClient blobClient = containerClient.GetBlobClient(fileId);

//DeleteIfExistsAsync() already handles a missing blob, so no separate Exists() check is needed.

await blobClient.DeleteIfExistsAsync();

}

private static async Task deleteTableEntry(string fileSpace, string fileId)

{

string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

string tableName = "FilesTable";

TableClient tableClient = new TableClient(connectionString, tableName);

Pageable<FilesTable> entities = tableClient.Query<FilesTable>(filter: $"FileSpace eq '{fileSpace}' and RowKey eq '{fileId}'");

foreach (FilesTable entity in entities)

{

tableClient.DeleteEntity(entity);

}

}

#endregion //////////////////////////////////////////////////////////////////////

#region Models //////////////////////////////////////////////////////////////////

public class FilesTable : ITableEntity

{

//Required properties

public string PartitionKey { get; set; }

public string RowKey { get; set; }

public DateTimeOffset? Timestamp { get; set; }

public ETag ETag { get; set; }

//Custom properties

public string FileName { get; set; }

public string FileSpace { get; set; }

}

class CloudFile

{

public CloudFile(string name, string fileSpace, string fileID, DateTime timeStamp, string link)

{

Name = name;

FileSpace = fileSpace;

FileID = fileID;

TimeStamp = timeStamp;

Link = link;

}

public string Name { get; set; }

public string FileSpace { get; set; }

public string FileID { get; set; }

public DateTime TimeStamp { get; set; }

public string Link { get; set; }

}

#endregion //////////////////////////////////////////////////////////////////////

}

}
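The endpoints above call `First()` on a header that may not have been sent, and they place the header value directly into the table filter string. Below is a minimal sketch of how both could be guarded. The names `RequestHelpers`, `TryGetSingleHeader`, `EscapeODataString` and `BuildFileSpaceFilter` are hypothetical and not part of the project. I'm also assuming that `req.Headers` can be passed as a `System.Net.Http.Headers.HttpHeaders`, which should hold for the isolated worker model but is worth verifying against your package version.

```csharp
using System;
using System.Linq;
using System.Net.Http.Headers;

// Hypothetical helpers for illustration; they are not part of the original project.
public static class RequestHelpers
{
    // Reads the first non-empty value of a header.
    // Returns false instead of throwing when the header is missing,
    // so the endpoint can answer with 400 Bad Request.
    public static bool TryGetSingleHeader(HttpHeaders headers, string name, out string value)
    {
        value = string.Empty;

        if (!headers.TryGetValues(name, out var values))
            return false;

        var first = values.FirstOrDefault();
        if (string.IsNullOrWhiteSpace(first))
            return false;

        value = first;
        return true;
    }

    // Doubles single quotes so the value cannot end the quoted string literal early.
    public static string EscapeODataString(string value) => value.Replace("'", "''");

    // Builds the same filter the backend uses, but with the value escaped.
    public static string BuildFileSpaceFilter(string fileSpace) =>
        $"FileSpace eq '{EscapeODataString(fileSpace)}'";
}
```

In an endpoint this could look like `if (!RequestHelpers.TryGetSingleHeader(req.Headers, "FileSpace", out var fileSpace)) return req.CreateResponse(HttpStatusCode.BadRequest);`. Azure.Data.Tables also provides `TableClient.CreateQueryFilter`, which may be a better fit than hand-written escaping.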

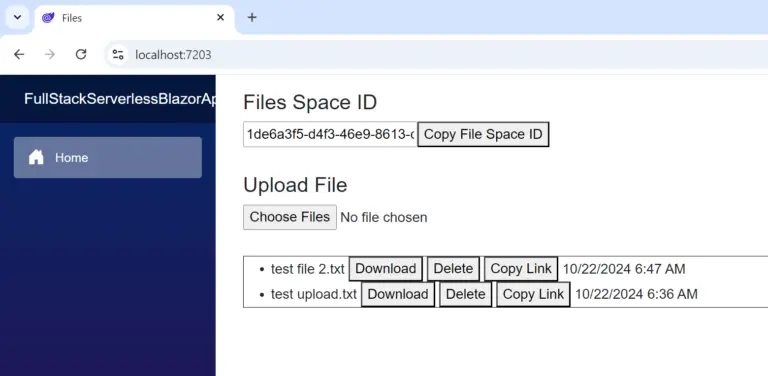

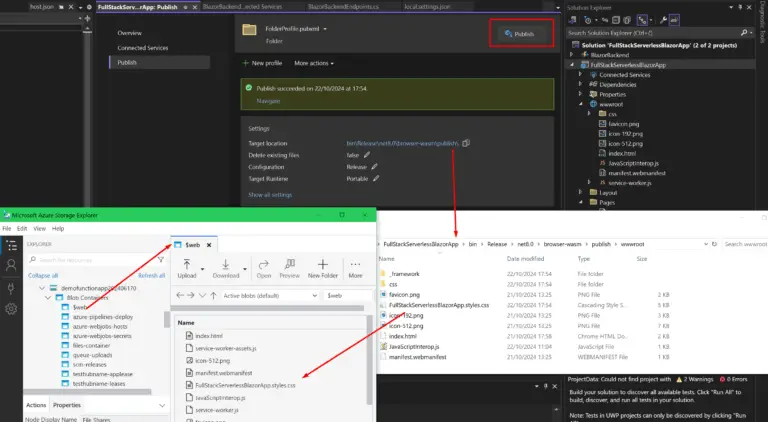

Result:

Hosting

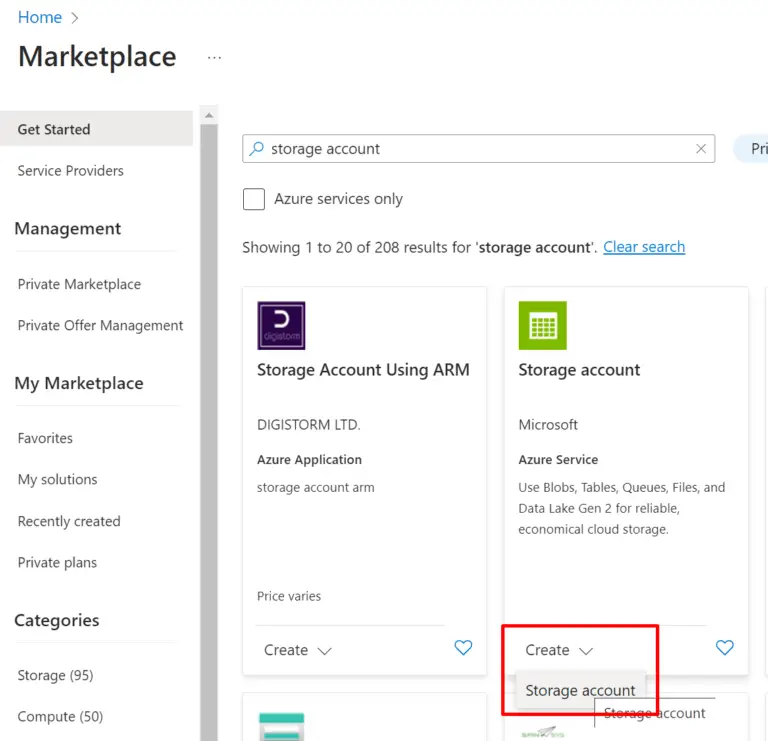

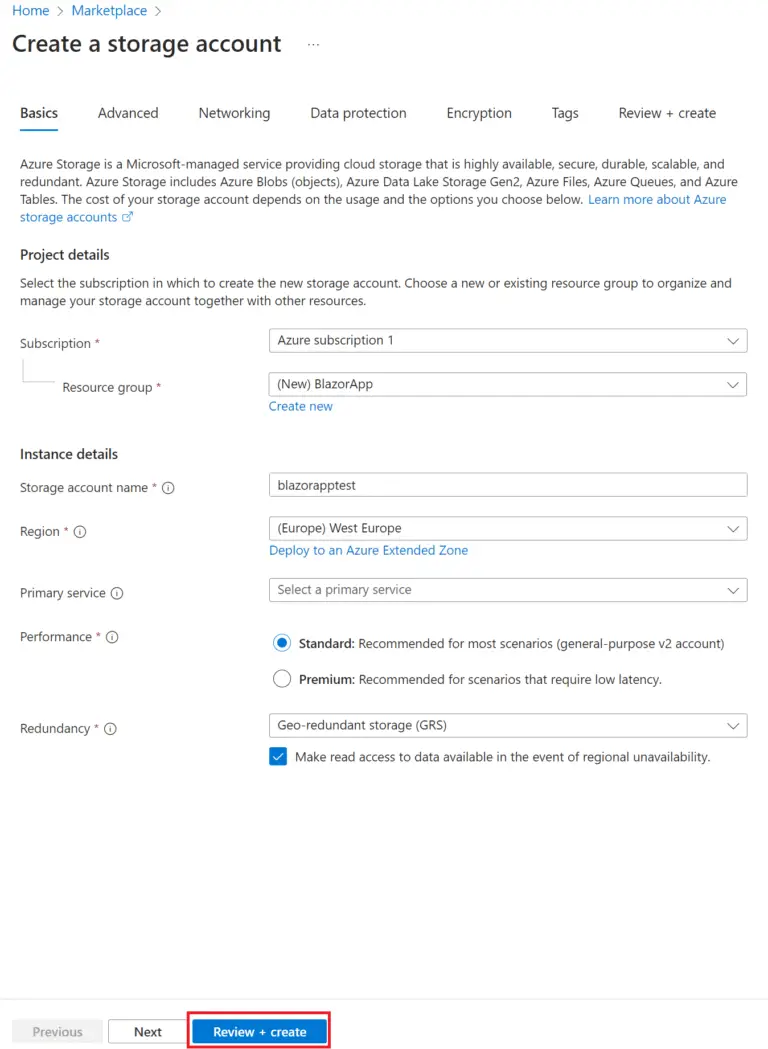

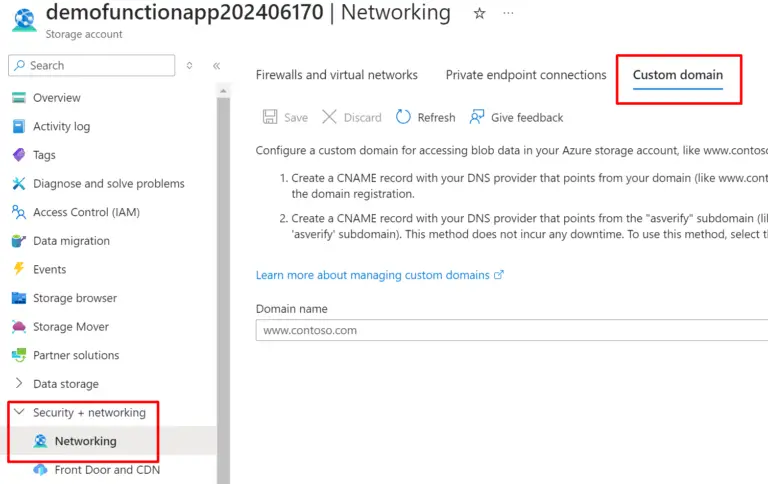

Let’s create the storage account we’ll use for hosting our front end in the portal. It must be a general-purpose v2 account; if yours isn’t, you can easily upgrade it in the settings.

Note: If you keep it publicly accessible, you can also reuse the storage account used to host your Azure Functions backend.

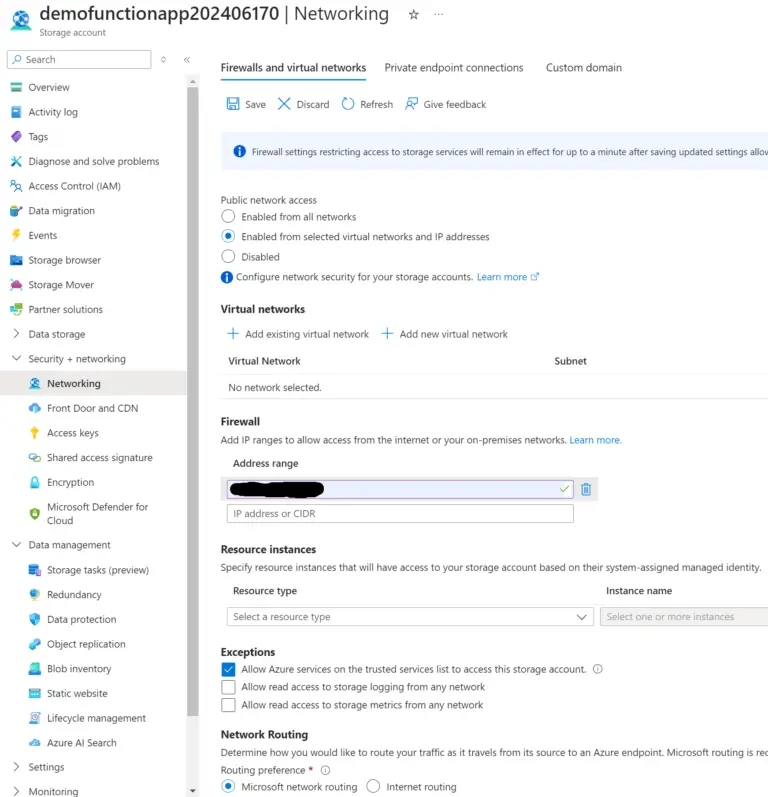

Note: If you limit the access to your app to only certain IPs you can still make it work by adding the entire IP pool of your Azure Function to the storage account IP whitelist.

Access Restriction

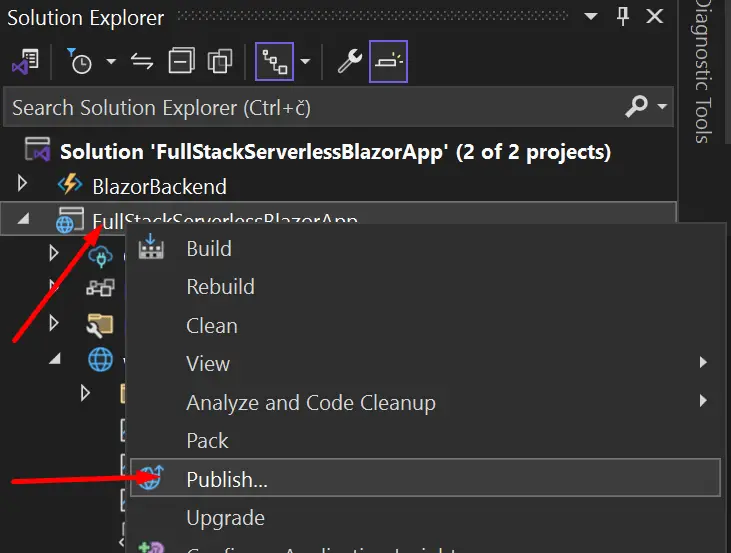

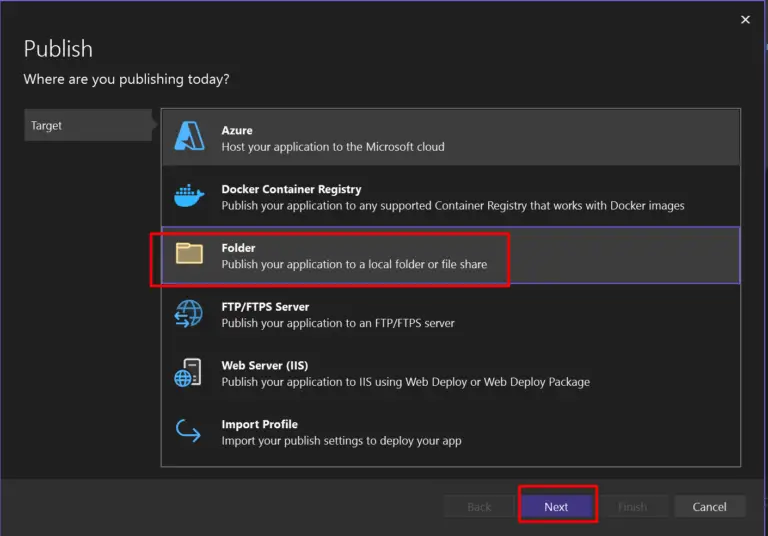

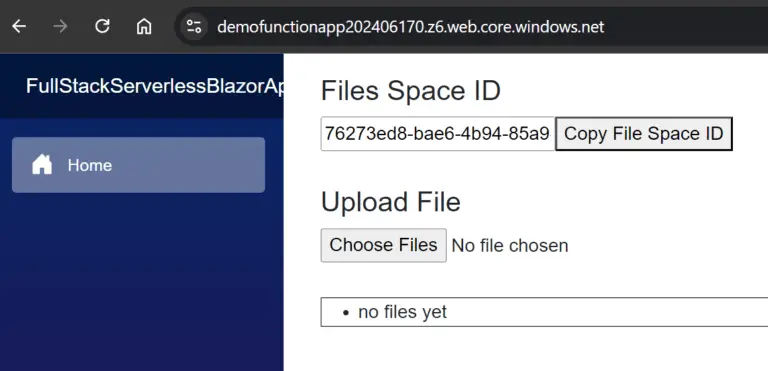

Manual Deployment

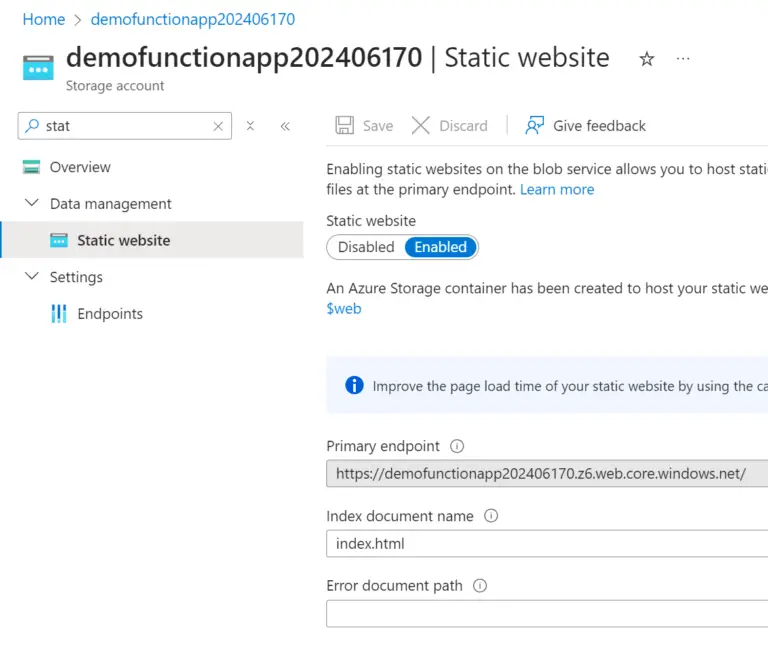

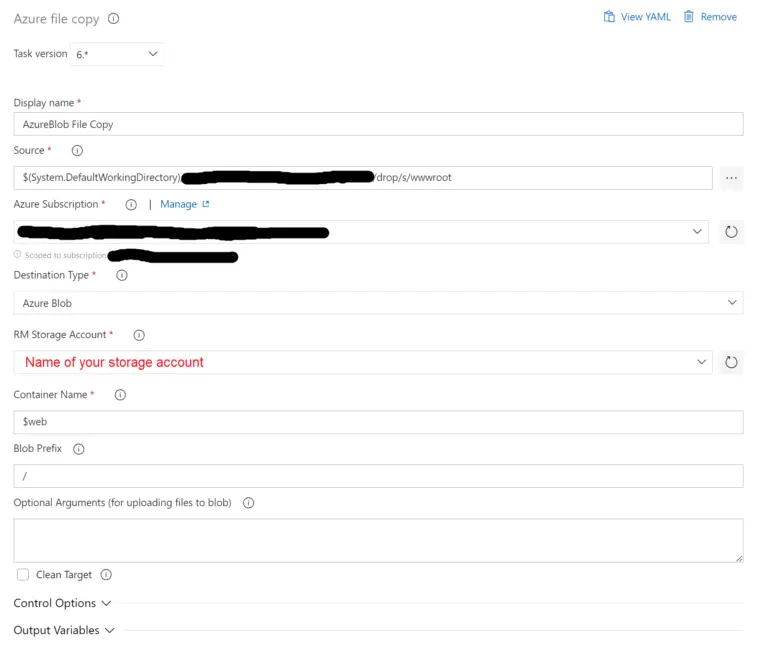

For now, I’ll just manually publish the Blazor app to a folder and copy over the files to the storage account $web blob container. In the next step, I’ll show you how to set up a CI/CD pipeline for the front end.

Note: If you are deploying this actual project don’t forget to set the backendBaseURL to the base URL of your Azure function.
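To avoid editing `backendBaseURL` by hand before every deployment, you could pick the URL based on where the app is running. This is only a sketch under a few assumptions: `BackendUrl` and `Resolve` are hypothetical names, the `Production` value is a placeholder you must replace with your own Function App URL, and the local URL is the one used in the frontend code in this post.

```csharp
using System;

// Hypothetical helper for illustration; it is not part of the original project.
public static class BackendUrl
{
    // Placeholder: replace with the base URL of your own Function App.
    public const string Production = "https://<your-function-app>.azurewebsites.net/api";

    // The local Azure Functions URL used by the frontend in this post.
    public const string Local = "http://localhost:7015/api";

    // Returns the local backend URL when the app itself is served from localhost,
    // otherwise the production URL.
    public static string Resolve(string appBaseUri)
    {
        string host = new Uri(appBaseUri).Host;

        bool isLocal = host.Equals("localhost", StringComparison.OrdinalIgnoreCase)
                       || host == "127.0.0.1";

        return isLocal ? Local : Production;
    }
}
```

In the page, this could be wired up in `OnInitializedAsync` with `backendBaseURL = BackendUrl.Resolve(NavigationManager.BaseUri);`, since `NavigationManager` is already injected there.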

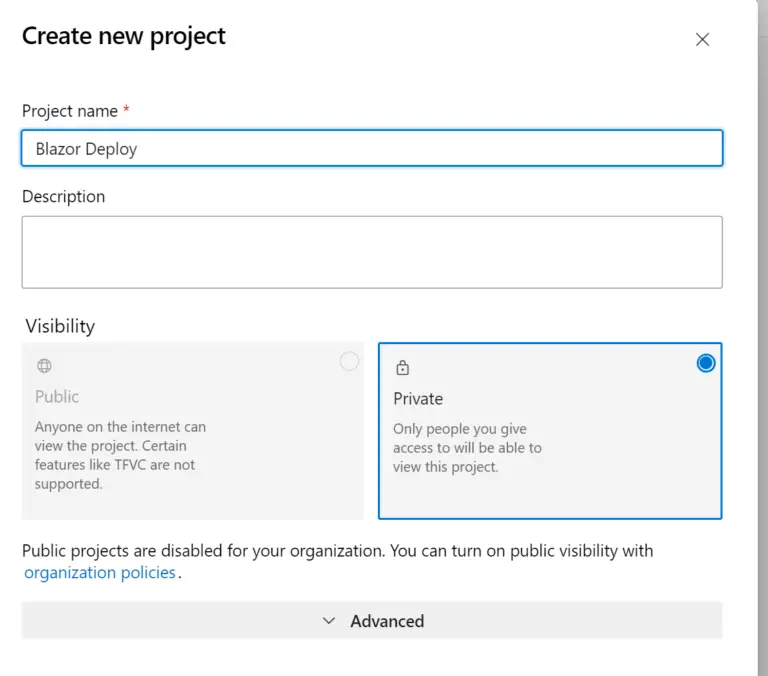

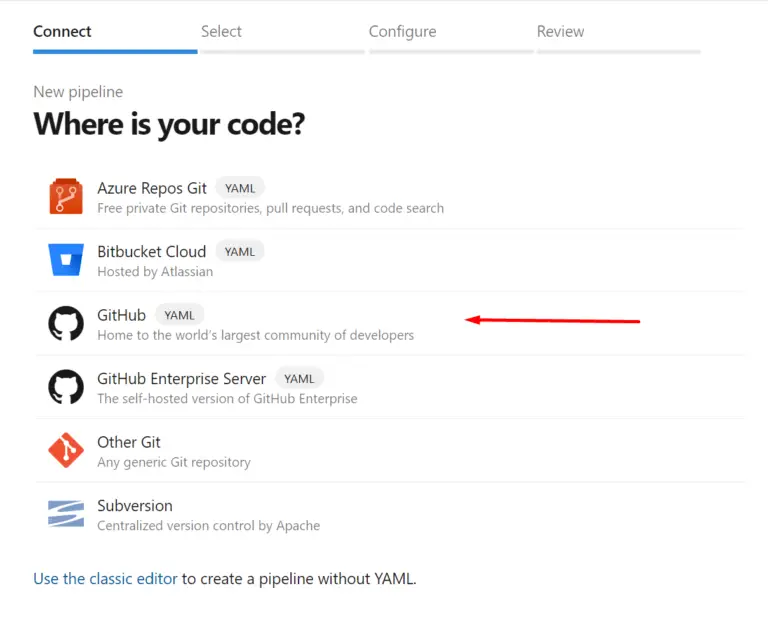

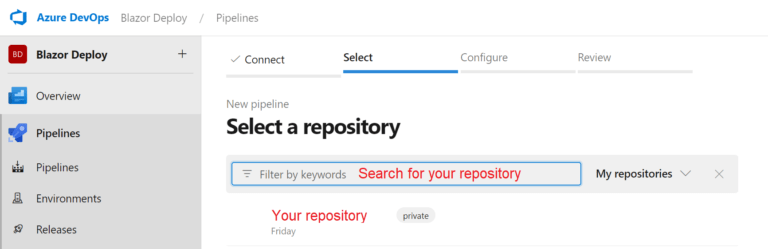

CI/CD Pipeline

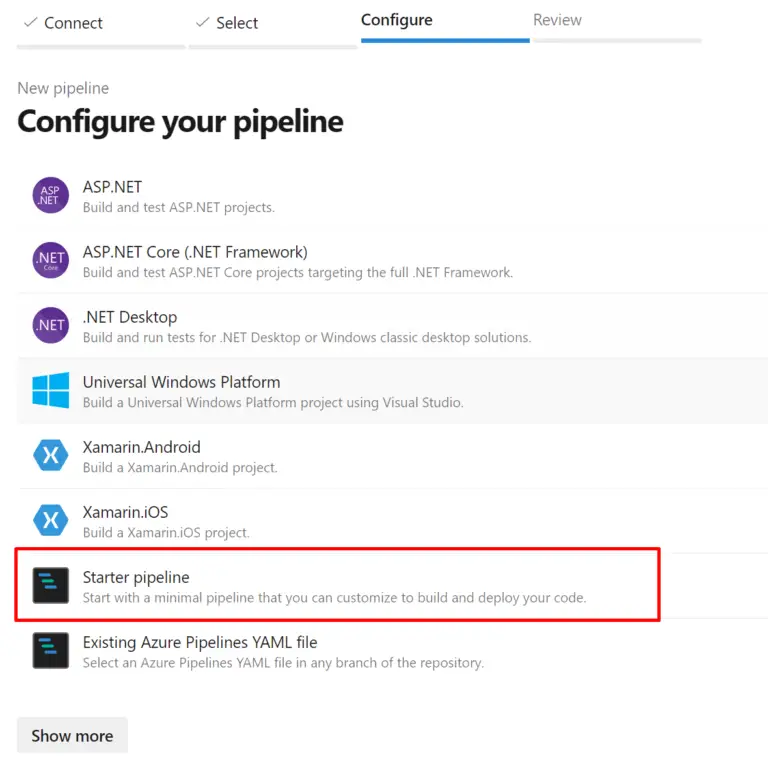

Build Pipeline

trigger:

- master

pool:

vmImage: ubuntu-latest

variables:

buildConfiguration: 'Release'

steps:

- task: UseDotNet@2

inputs:

version: '7.x'

- task: DotNetCoreCLI@2

displayName: Publish

inputs:

command: publish

publishWebProjects: True

arguments: '--configuration $(BuildConfiguration) --output $(build.artifactstagingdirectory)'

zipAfterPublish: false

- task: PublishBuildArtifacts@1

inputs:

PathtoPublish: '$(Build.ArtifactStagingDirectory)'

ArtifactName: 'drop'

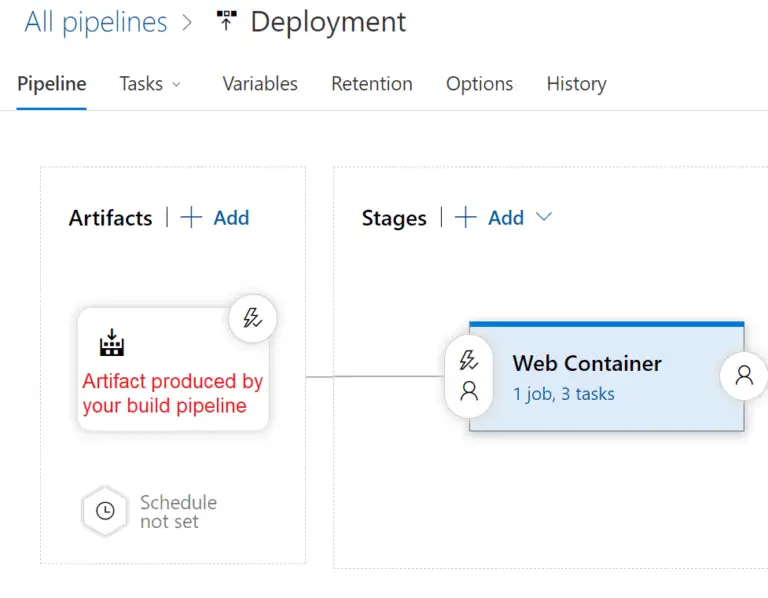

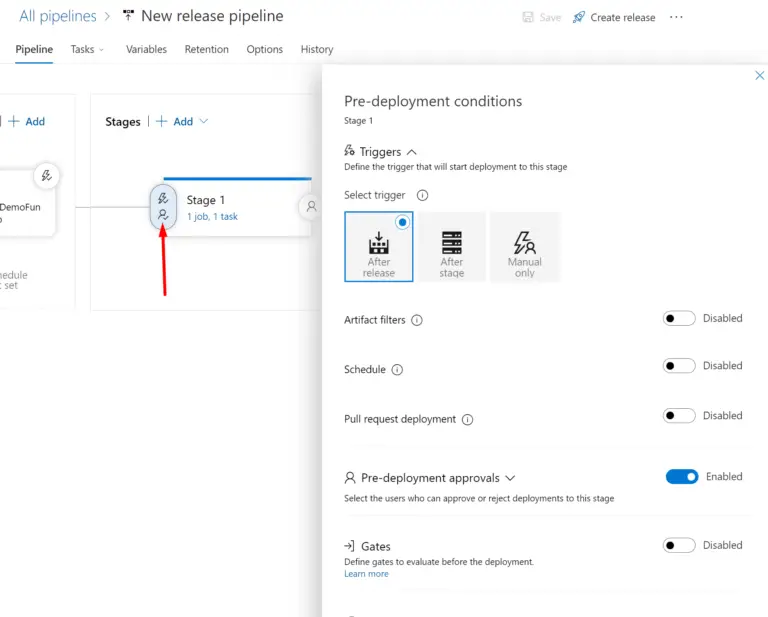

publishLocation: 'Container' Release Pipeline

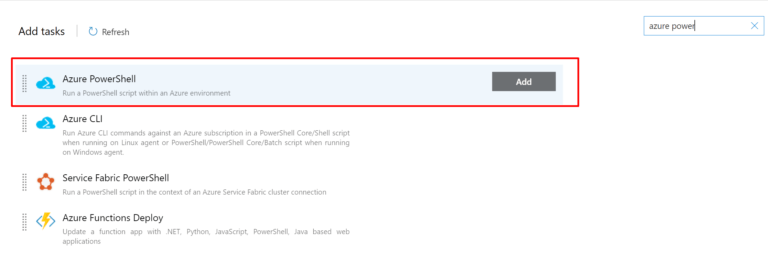

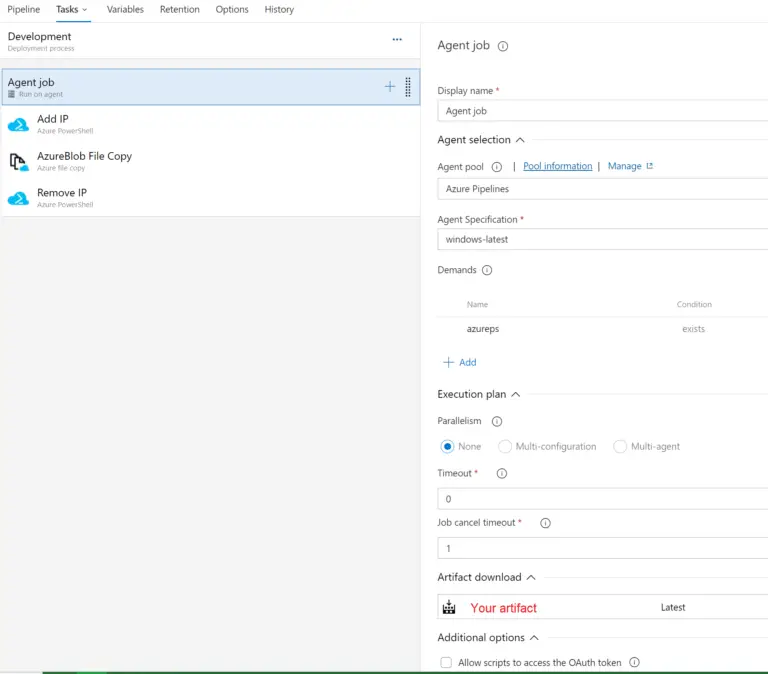

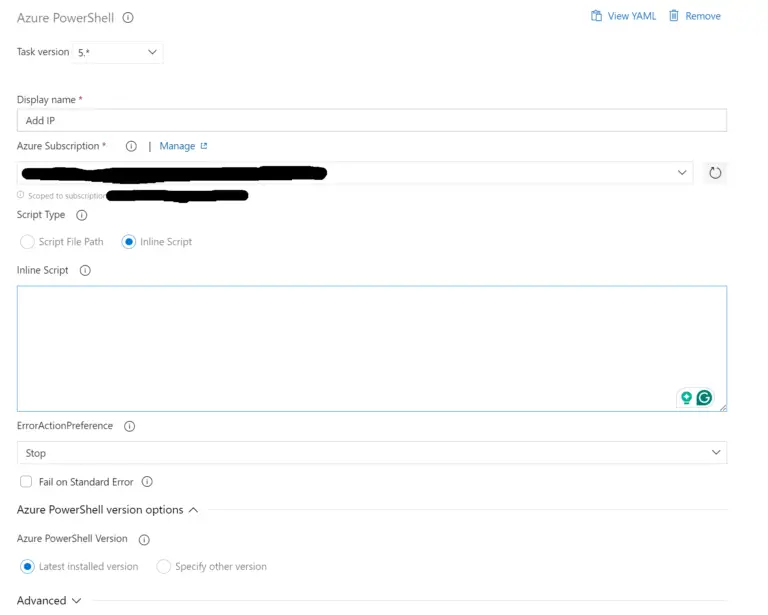

Open up the stage to configure the deployment. Here we’ll search for and add three tasks: “Azure PowerShell”, “AzureBlob File Copy” and another “Azure PowerShell”.

Note: You only need the “Azure PowerShell” tasks if you have restricted IP access to the storage account. If you have left your storage account public, you only need the “AzureBlob File Copy” task.

# Add the agent IP to the storage firewall.

$environment = "production";

# You can have multiple environments.

$resourceGroupNames = @{

production = "name of the resource group your storage account is in"

}

$storageAccountNames = @{

production = "name of your storage account"

}

# Get the IP of the current build agent.

$ipaddress = (Invoke-WebRequest https://ipinfo.io/ip).Content.Trim()

Write-Host "Agent IP address: " $ipaddress

# Allow access to the storage account from the build agent IP.

Write-Host "Adding IP $ipaddress to the firewall ..."

Add-AzStorageAccountNetworkRule -ResourceGroupName $resourceGroupNames[$environment] -Name $storageAccountNames[$environment] -IPAddressOrRange $ipaddress

Write-Host "Agent IP address added."

Write-Host "Waiting for 30s to make sure the changes are applied."

Start-Sleep -Seconds 30

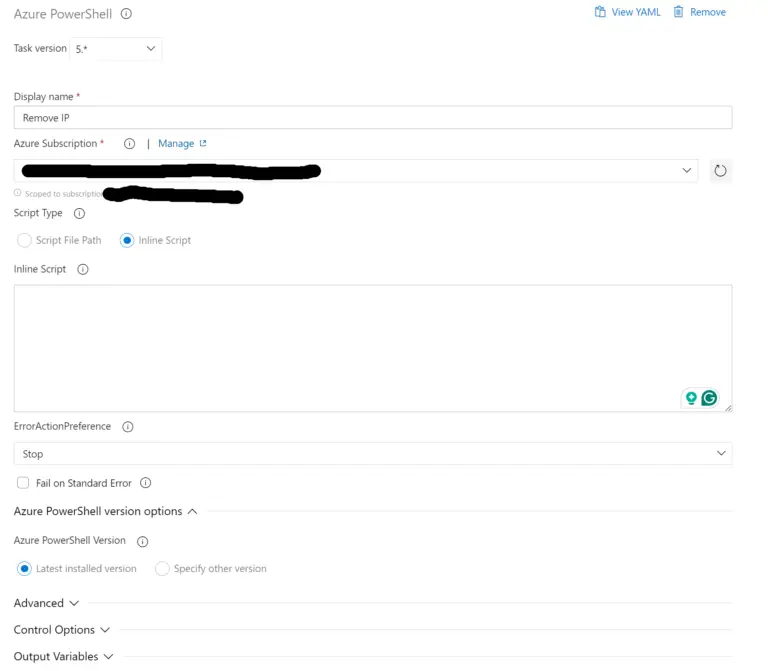

Write-Host "PS script is done." # Remove the agent IP to the storage firewall.

$environment = "production";

$resourceGroupNames = @{

production = "name of the resource group your storage account is in"

}

$storageAccountNames = @{

production = "name of your storage account"

}

# All allowed IPs will get removed except the ones specified here.

$existingIpaddress = "IP address you don't want removed", "IP address you don't want removed"; # otherwise leave empty

# Remove the IP address from the storage account.

$rules = (Get-AzStorageAccountNetworkRuleSet -ResourceGroupName $resourceGroupNames[$environment] -Name $storageAccountNames[$environment]).IpRules

foreach ($rule in $rules)

{

if($existingIpaddress -notcontains $rule.ipAddressOrRange)

{

# Remove the IP Address from the firewall.

Remove-AzStorageAccountNetworkRule -ResourceGroupName $resourceGroupNames[$environment] -Name $storageAccountNames[$environment] -IPAddressOrRange $rule.ipAddressOrRange

Write-Host "IP Address: " $rule.ipAddressOrRange " has been removed from the firewall"

}

}